Pcfruit (Proefcentrum voor Fruitteelt) continually explores new and existing technologies that can add value to fruit growing, and conducts research into sustainable fruit production methods. The Smart Growers project, for example, aims to stimulate the adoption of Smart Farming: a more sustainable, technology-driven way of practicing agriculture. In this case, pcfruit wanted to explore what artificial intelligence could do for blueberry farming.

The aim of this project was to develop an object detection algorithm that lets farmers count the blossoms visible in camera images. Because blossoms are a strong predictor of future blueberries, the expected yield can be derived from this count. For example, suppose a farmer's plot of bushes holds 10,000 flowers and, on average, 20% of blossoms do not turn into blueberries. We can then estimate a yield of 8,000 blueberries.
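
The arithmetic in this example fits in a small helper. This is a minimal sketch: the function name and the use of a single, flat loss rate for the whole plot are illustrative assumptions, not part of pcfruit's method.

```python
def estimate_yield(blossom_count: int, loss_rate: float) -> int:
    """Estimate the number of berries from a blossom count.

    loss_rate is the assumed fraction of blossoms that do not become berries
    (0.20 in the example above); in practice it would come from historical data.
    """
    if not 0.0 <= loss_rate <= 1.0:
        raise ValueError("loss_rate must be between 0 and 1")
    # Berries = blossoms that survive, rounded to a whole number
    return round(blossom_count * (1.0 - loss_rate))


print(estimate_yield(10_000, 0.20))  # 8000
```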

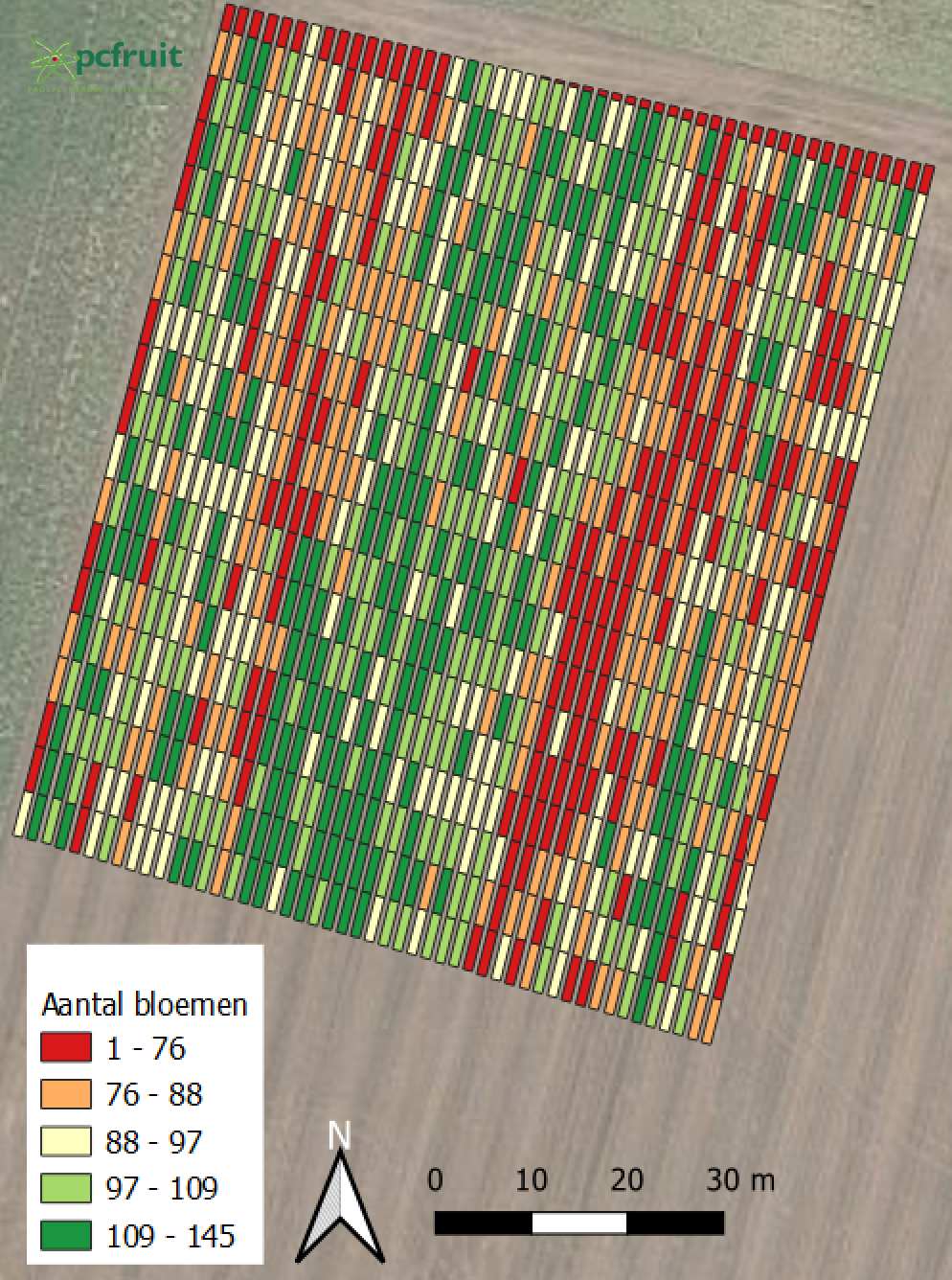

These estimates give blueberry farmers a convenient metric for several business decisions. One example is the number of pickers they need to hire to harvest the berries. The estimates also let farmers accurately forecast the supply they can offer at auction. Finally, farmers can use this information to build a map of the field and see where issues might occur. If fewer berries grow in a specific part of the field, for instance, something could be wrong with the irrigation line there.
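
One way to turn per-zone blossom counts into such a field map is to flag zones that fall well below the rest of the field. The sketch below makes two assumptions that do not come from the project. First, the field is split into hypothetical (row, section) zones. Second, a zone is flagged when its count is below half the field median, an arbitrary cutoff. A flagged zone only shows where to look; it does not diagnose the cause.

```python
from statistics import median


def flag_low_zones(counts: dict[tuple[int, int], int], ratio: float = 0.5) -> list[tuple[int, int]]:
    """Return zones whose blossom count is below `ratio` times the field median."""
    if not counts:
        return []
    threshold = ratio * median(counts.values())
    # Sorted so the output is stable and easy to map back onto the field
    return sorted(zone for zone, n in counts.items() if n < threshold)


# Hypothetical blossom counts per (row, section) zone of one plot
field = {
    (0, 0): 410, (0, 1): 395, (0, 2): 120,
    (1, 0): 402, (1, 1): 388, (1, 2): 95,
}
print(flag_low_zones(field))  # [(0, 2), (1, 2)]
```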

Object segmentation

To detect and count blossoms, we first needed video footage of the fields. For this, we relied on external expertise to set up the camera on the farmers' equipment.

When capturing the images, we had to keep a few constraints in mind. First, blueberry bushes only bloom for one month per year; in the other months, you will find blueberries or nothing at all. Because the blossom period is so short, we only had a few chances to record the necessary footage, which proved to be a challenge.

Another constraint was the lack of diversity in the data: only one farmer recorded footage, on a single day, in a single field. Moreover, only some parts of the recording were usable. Sometimes the farmer was in the frame, or the camera filmed a part of the field without bushes. We therefore carefully selected the usable frames and uploaded them to Segments.ai, a data labeling platform, where every blossom was segmented at the pixel level.
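
The article does not say how the pixel-level segments were converted into training labels for an object detector. One common approach is to take the bounding box around each blossom's mask and write it in YOLO's normalized `x_center y_center width height` label format. The sketch below assumes that approach. The function name and the list-of-lists mask representation are illustrative choices.

```python
def mask_to_yolo_bbox(mask: list[list[int]]) -> tuple[float, float, float, float]:
    """Convert a binary mask of one blossom into a normalized YOLO-style box.

    Returns (x_center, y_center, width, height), each relative to the image size.
    """
    height = len(mask)
    width = len(mask[0]) if height else 0
    # Rows and columns that contain at least one blossom pixel
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(width) if any(mask[r][c] for r in range(height))]
    if not rows:
        raise ValueError("mask contains no blossom pixels")
    x_min, x_max = min(cols), max(cols) + 1  # +1: the box covers whole pixels
    y_min, y_max = min(rows), max(rows) + 1
    return (
        (x_min + x_max) / 2 / width,
        (y_min + y_max) / 2 / height,
        (x_max - x_min) / width,
        (y_max - y_min) / height,
    )


mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
print(mask_to_yolo_bbox(mask))  # (0.5, 0.5, 0.5, 0.5)
```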

Training our AI models: detecting blossoms

After the labeling phase, we trained multiple AI models on the labeled data to see which would perform best. The winner was YOLOv6, a state-of-the-art framework for real-time object detection. It is built on a convolutional neural network, which can be trained on large datasets to detect objects in images and videos.

So, how do these models detect blossoms? For every frame, the model divides the image into a grid of cells. For each cell, it predicts whether the desired object, in this case a blossom, is visible there. There are a few limitations, which we discuss below, but the results are already promising.
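
The grid idea can be illustrated in a simplified form, following the original YOLO formulation. During training, each labeled blossom is assigned to the cell that contains its center. At inference time, only cells whose confidence reaches a threshold are kept. YOLOv6 itself predicts on several feature-map scales and uses a more elaborate label-assignment scheme, so this is a conceptual sketch, not how YOLOv6 is implemented. The function names and the 0.5 threshold are assumptions.

```python
def responsible_cell(x_center: float, y_center: float, grid_size: int) -> tuple[int, int]:
    """Return (row, col) of the grid cell containing a box center.

    Coordinates are normalized to [0, 1], as in YOLO-style label files.
    """
    # Clamp so a center at exactly 1.0 falls into the last cell
    col = min(int(x_center * grid_size), grid_size - 1)
    row = min(int(y_center * grid_size), grid_size - 1)
    return row, col


def detections_from_grid(scores: list[list[float]], threshold: float = 0.5) -> list[tuple[int, int, float]]:
    """List (row, col, score) for cells whose blossom confidence reaches the threshold."""
    return [
        (r, c, s)
        for r, row in enumerate(scores)
        for c, s in enumerate(row)
        if s >= threshold
    ]


print(responsible_cell(0.5, 0.5, 7))                     # (3, 3)
print(detections_from_grid([[0.1, 0.8], [0.6, 0.2]]))    # [(0, 1, 0.8), (1, 0, 0.6)]
```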

Precision (the percentage of predicted blossoms that were correct) was 90%, and recall (the percentage of all blossoms that were predicted) was 55%. In other words, the model found 55% of the blossoms in the field, but when it did detect a flower, it was correct 90% of the time. The recall might seem low, but given the small, single-field dataset, it is a good result.
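
In object detection, a prediction usually counts as correct when it overlaps a labeled blossom closely enough. This overlap is commonly measured as intersection over union (IoU). The sketch below assumes a 0.5 IoU threshold and greedy one-to-one matching. The article does not state which matching rule or threshold produced its 90% and 55% figures. In practice, predictions would be sorted by confidence before matching.

```python
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds: list[Box], truths: list[Box], iou_threshold: float = 0.5) -> tuple[float, float]:
    """Greedily match predictions to labeled blossoms, then compute precision and recall."""
    unmatched = list(truths)
    tp = 0
    for p in preds:
        # Best remaining labeled blossom for this prediction
        best = max(unmatched, key=lambda t: iou(p, t), default=None)
        if best is not None and iou(p, best) >= iou_threshold:
            unmatched.remove(best)  # each blossom can be matched only once
            tp += 1
    fp = len(preds) - tp   # predictions that matched no blossom
    fn = len(truths) - tp  # blossoms that no prediction found
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if truths else 0.0
    return precision, recall


truths = [(0, 0, 10, 10), (20, 20, 30, 30), (40, 40, 50, 50), (60, 60, 70, 70)]
preds = [(0, 0, 10, 10), (21, 21, 31, 31), (80, 80, 90, 90)]
print(precision_recall(preds, truths))  # (0.666..., 0.5)
```

With hypothetical counts of 99 correct detections, 11 false detections and 81 missed blossoms, the same formulas give 99/110 = 90% precision and 99/180 = 55% recall.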

I enjoyed our collaboration with Brainjar. Being in contact with the people actually carrying out the technical work truly adds value, as we speak the same language. The short lines of communication ensured we could look for an optimal solution. - Joke Vandermaesen, Project Coordinator

A next step toward more accurate results could be to add a depth camera that captures more precise footage of the blossom rows. As mentioned, our dataset was minimal, with footage from only one farmer, so more training data would make the models even more powerful. We look forward to moving this proof of concept to a production-ready solution.

This research was part of the project “Smart Growers”, funded by the Interreg V program Vlaanderen-Nederland, with financial support from the European Regional Development Fund, VLAIO and the provinces of Limburg, Antwerp and East Flanders.